Historical Methods for Prioritization

1. Historical Methods for Prioritization

Historically, organizations have relied on several standardized frameworks to prioritize vulnerabilities, with varying degrees of success. The most common methods include the Common Vulnerability Scoring System (CVSS), the Exploit Prediction Scoring System (EPSS), and the Known Exploited Vulnerabilities (KEV) catalog. Each of these methods serves a distinct purpose, but each also has inherent limitations that can hinder effective vulnerability prioritization within an overall vulnerability management program.

1.1 Common Vulnerability Scoring System (CVSS)

Definition: The Common Vulnerability Scoring System (CVSS) provides a way to capture the principal characteristics of a vulnerability and produce a numerical score reflecting its severity. A numerical score can then be translated into a qualitative representation (such as low, medium, high, and critical) to help organizations properly assess and prioritize vulnerabilities as part of their vulnerability management process.
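The mapping from numerical score to qualitative rating is fixed by the CVSS v3.x and v4.0 specifications: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). A minimal Python sketch of that lookup follows; the function name `qualitative_rating` is our own and is not part of any official library.

```python
def qualitative_rating(score: float) -> str:
    """Map a CVSS v3.x/v4.0 score (0.0 to 10.0) to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS scores range from 0.0 to 10.0, got {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:    # 0.1 to 3.9
        return "Low"
    if score < 7.0:    # 4.0 to 6.9
        return "Medium"
    if score < 9.0:    # 7.0 to 8.9
        return "High"
    return "Critical"  # 9.0 to 10.0


print(qualitative_rating(7.5))  # High
```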

The Forum of Incident Response and Security Teams (FIRST) was selected by the National Infrastructure Advisory Council (NIAC) to be the custodian of CVSS and has been maintaining it since 2005.

Uses:

- CVSS scores help organizations prioritize vulnerabilities based on their potential impact.

- Security teams often use CVSS within vulnerability management tools to classify vulnerabilities and assess their risk levels.

The Evolution of CVSS

The Common Vulnerability Scoring System was launched by the National Infrastructure Advisory Council (NIAC) in 2005.

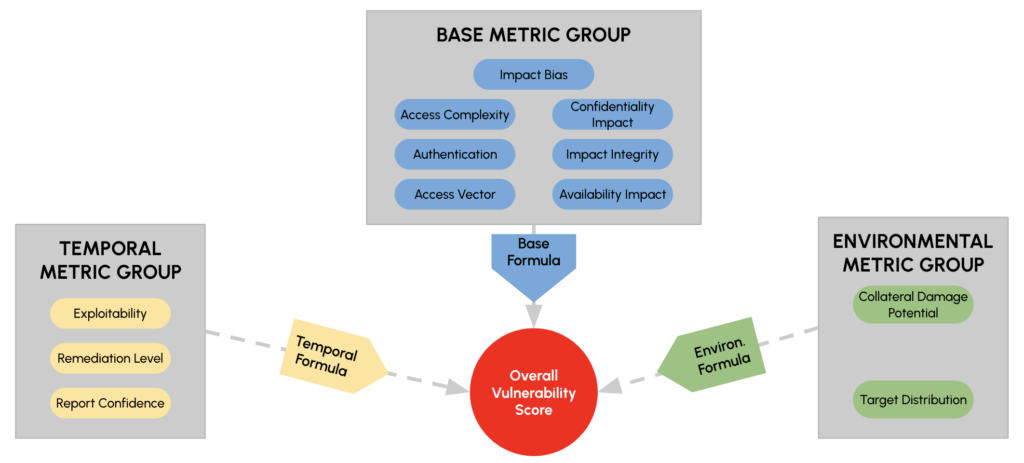

CVSS v1 is not that different from what exists today. Later versions added a few metric groups and changed some of the metrics within them, but the overall concept of base, temporal, and environmental formulas remains.

CVSS v2 followed v1 quickly. CVSS v1 drew criticism on release, and v2 expanded the model in response. For example, v1 did not differentiate the impact to the confidentiality, integrity, and availability (CIA) of a system when an attacker gains root access versus user-level access. CVSS v2 scores are still reported in some vulnerability management tools.

CVSSv1 (2005)

- FIRST selected by NIAC to be custodian of CVSS in 2005

CVSSv2 (2007)

- Added granularity for access complexity and access vector

- Moved impact bias metric to environmental metric group

- Differentiated between root and user level access for CIA impact

- Changes to authentication metric

- v2 was still criticized for its lack of granularity in metrics and its inability to distinguish between vulnerability types and risk profiles

CVSSv3.X (2015/2019)

- Added User Interaction and Privileges Required metrics to the Base metric group

- CIA metrics updated

- Access Vector renamed to Attack Vector, and Access Complexity reworked as Attack Complexity

- v3.1 added the CVSS Extensions Framework, allowing a scoring provider to include additional metrics

All of these versions require you to understand the impact of a vulnerability if it were to be exploited. But you can only prioritize vulnerabilities if you know what the asset is, or whether it even exists!
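Impact is built directly into the Base formula. As a sketch, the CVSS v3.1 Base score equation published by FIRST can be written in Python as below. It handles only Base metrics (no Temporal or Environmental adjustments), performs minimal validation, and the function names are our own; treat it as an illustration and rely on FIRST's official calculator for authoritative scores.

```python
import math

# Base metric weights as published in the CVSS v3.1 specification.
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}  # PR weighs more when Scope is Changed
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(value: float) -> float:
    """CVSS v3.1 Roundup: smallest one-decimal number >= value, avoiding float noise."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute the CVSS v3.1 Base score from a vector such as
    'CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H'."""
    prefix, *parts = vector.split("/")
    if prefix != "CVSS:3.1":
        raise ValueError(f"not a CVSS v3.1 vector: {vector}")
    m = dict(part.split(":", 1) for part in parts)
    scope_changed = m["S"] == "C"

    # Impact Sub-Score (ISS) combines the three CIA impact metrics.
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    pr = (PR_CHANGED if scope_changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]

    if impact <= 0:
        return 0.0  # no CIA impact means a Base score of 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:H"))  # 9.8
```

The explicit `roundup` follows the v3.1 specification's approach of rounding to an integer first, so that floating-point noise (for example, 4.000000001) does not push a score up to the next decimal.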

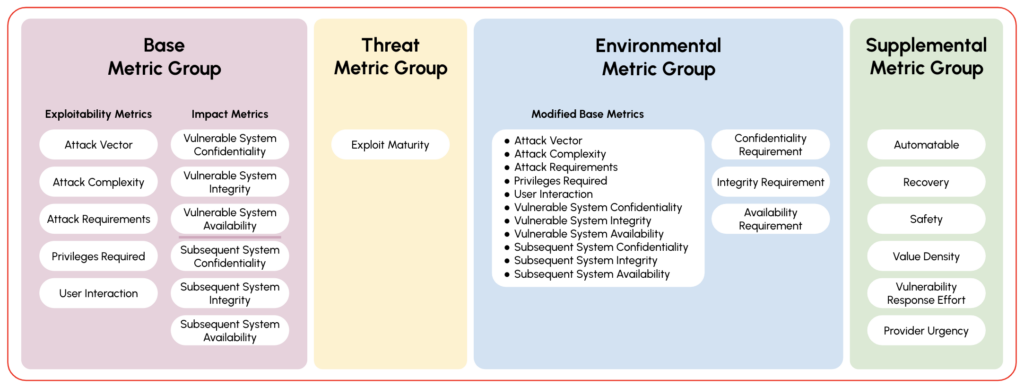

CVSS v4

CVSS v4.0, released on November 1, 2023, introduced the following changes:

- Reinforces the concept that CVSS is not just the Base score

- Finer granularity through the addition of new Base metrics and values

- Enhanced disclosure of impact metrics

- Temporal metric group renamed to Threat metric group

- New Supplemental Metric Group to convey additional extrinsic attributes of a vulnerability that do not affect the final CVSS-BTE score

- Additional focus on OT/ICS/Safety

In addition to the change in nomenclature, CVSS v4.0 labels each score by the metric groups that went into it: CVSS-B (Base metrics only), CVSS-BT (Base and Threat), CVSS-BE (Base and Environmental), and CVSS-BTE (Base, Threat, and Environmental). So the label attached to a v4.0 score tells you which combination of metric groups it includes.
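One way to see the v4.0 nomenclature in action is to derive the label from a vector string. The sketch below assumes the metric abbreviations in the v4.0 specification (Exploit Maturity `E` for the Threat group; the Security Requirements and Modified Base metrics for the Environmental group) and treats a value of `X` (Not Defined) as not supplied, which is our own simplifying assumption. Supplemental metrics are ignored because they do not affect the score. The function name `nomenclature` is hypothetical.

```python
# Metric abbreviations from the CVSS v4.0 specification.
THREAT_METRICS = {"E"}  # Exploit Maturity
ENVIRONMENTAL_METRICS = {
    "CR", "IR", "AR",                          # Security Requirements
    "MAV", "MAC", "MAT", "MPR", "MUI",         # Modified exploitability metrics
    "MVC", "MVI", "MVA", "MSC", "MSI", "MSA",  # Modified impact metrics
}


def nomenclature(vector: str) -> str:
    """Return CVSS-B, CVSS-BT, CVSS-BE, or CVSS-BTE for a CVSS v4.0 vector string."""
    prefix, *parts = vector.split("/")
    if prefix != "CVSS:4.0":
        raise ValueError(f"not a CVSS v4.0 vector: {vector}")
    metrics = dict(part.split(":", 1) for part in parts)
    # Assumption: a metric set to X (Not Defined) does not count as supplied.
    supplied = {name for name, value in metrics.items() if value != "X"}

    label = "CVSS-B"
    if supplied & THREAT_METRICS:
        label += "T"
    if supplied & ENVIRONMENTAL_METRICS:
        label += "E"
    return label


BASE = "CVSS:4.0/AV:N/AC:L/AT:N/PR:N/UI:N/VC:H/VI:H/VA:H/SC:N/SI:N/SA:N"
print(nomenclature(BASE + "/E:A"))  # CVSS-BT
```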

Limitations with CVSS

- Subjectivity: While CVSS aims to provide an objective scoring system, the initial assessment of vulnerabilities often involves subjective interpretation of the impact and exploitability, leading to inconsistencies.

- Focus on exploitability: CVSS primarily focuses on how a vulnerability can be exploited rather than the actual likelihood of an exploit occurring in a given environment.

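- Lack of context: Published CVSS scores (for example, those in the NVD) are typically Base scores, which do not consider environmental factors such as existing security controls, asset criticality, or business impact. This can render them less relevant in specific organizational contexts. The Environmental metric group can capture some of this context, but each organization has to supply it for its own environment.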

1.2 Exploit Prediction Scoring System (EPSS)

Definition: EPSS is a framework designed to estimate the likelihood that a vulnerability will be exploited in the wild. It is based on various risk factors, including the vulnerability’s characteristics and contextual data.

EPSS was launched in 2021, and version 3 was released in 2023. EPSS produces a daily estimate of the probability that exploitation activity will be observed over the next 30 days.

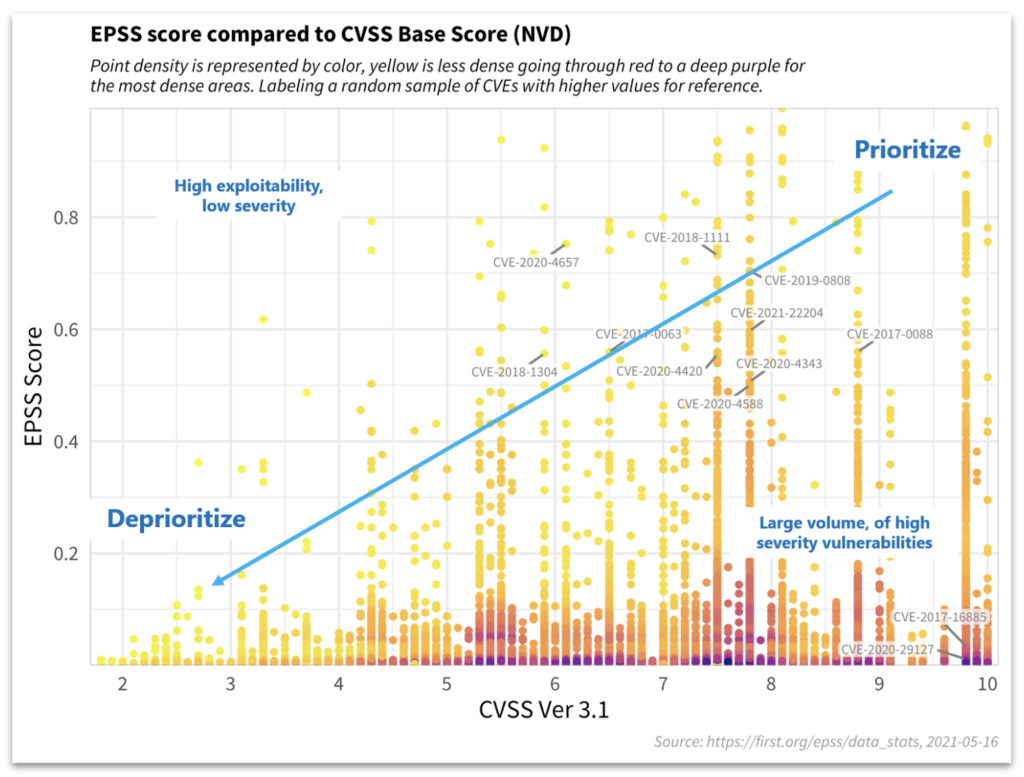

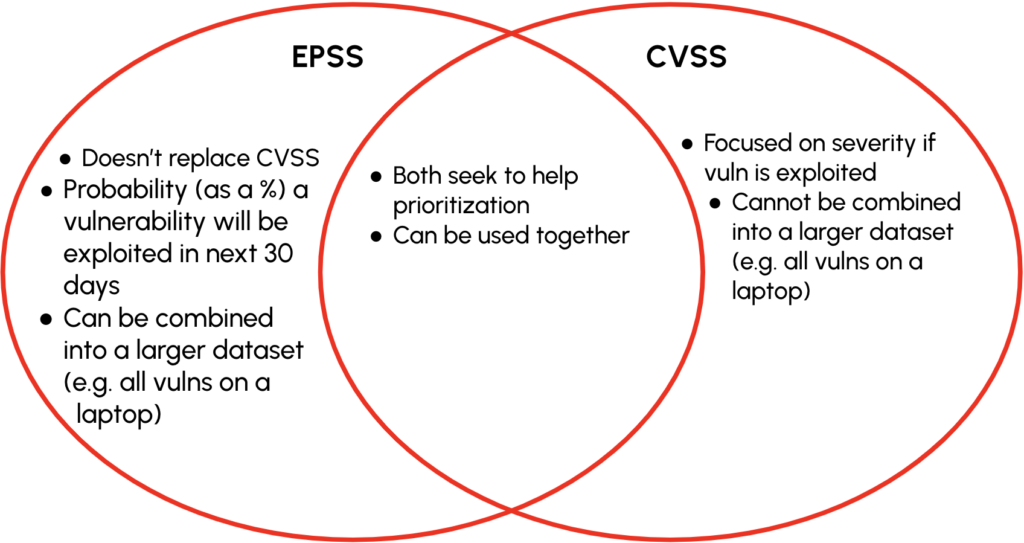

According to FIRST, which also manages EPSS, the system is a “data-driven effort for estimating the likelihood (probability) that a software vulnerability will be exploited in the wild.” EPSS is not a replacement for CVSS but a complement to it. EPSS takes into consideration factors such as the number of reference links associated with a CVE, the market share of the impacted software product, and the industries and products that threat actors may be specifically targeting. EPSS does not measure severity; it estimates the likelihood of exploitation.

Uses:

- EPSS scores help organizations prioritize vulnerabilities by predicting the likelihood of exploitation in the next 30 days. This allows security teams to focus on the most pressing risks.

- EPSS scores can be combined across a larger set of vulnerabilities (e.g., all vulnerabilities on a laptop).

- This scoring system provides actionable insights that complement CVSS scores, enabling a more nuanced understanding of vulnerability risk.
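Combining EPSS scores across a set of vulnerabilities is simple probability arithmetic if you assume the per-CVE probabilities are independent (a simplification, since real-world exploitation of related CVEs may be correlated): the chance that at least one is exploited in the next 30 days is one minus the product of the chances that each is not. A minimal sketch, with a hypothetical function name and hypothetical scores in the usage line:

```python
import math


def prob_any_exploited(epss_scores: list[float]) -> float:
    """Probability that at least one vulnerability in the set is exploited in the
    next 30 days, assuming the per-CVE EPSS probabilities are independent."""
    for p in epss_scores:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"EPSS scores are probabilities in [0, 1], got {p}")
    # P(at least one) = 1 - P(none) = 1 - product of (1 - p_i)
    return 1.0 - math.prod(1.0 - p for p in epss_scores)


# Hypothetical EPSS scores for three vulnerabilities found on one laptop.
print(round(prob_any_exploited([0.02, 0.10, 0.35]), 4))  # 0.4267
```

Note how the combined figure is higher than any single score: a device with many moderately likely vulnerabilities can be a more pressing target than any one of them suggests.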

Limitations:

- Data Dependency: EPSS relies heavily on the availability of real-world data regarding exploitation trends. If this data is lacking or not current, the predictive capability of EPSS may be compromised.

- Complexity: Implementing EPSS can be complex, requiring organizations to integrate it into their existing vulnerability management workflows. This may necessitate additional training and resources.

- Dynamic Nature: The threat landscape is constantly evolving, and because EPSS scores are recalculated daily, they can change over time, making it more difficult to prioritize vulnerabilities consistently. This necessitates regular updates and monitoring, which can be resource-intensive.

1.3 Known Exploited Vulnerabilities (KEV)

Definition: The Cybersecurity and Infrastructure Security Agency (CISA) maintains the KEV catalog, an authoritative source of vulnerabilities that have been exploited in the wild.

Uses:

- The KEV catalog serves as a prioritized list of vulnerabilities that organizations should address immediately. These vulnerabilities have been confirmed to be actively exploited by threat actors.

- Organizations can use the KEV catalog as one of their inputs to prioritize vulnerabilities. This ensures remediation efforts are focused on the most critical vulnerabilities that pose an imminent threat.
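As a sketch of using KEV as one input among several, the code below reads CVE IDs from a parsed copy of CISA's KEV JSON feed (it assumes the feed's `vulnerabilities` array and `cveID` field; check these against the current schema) and applies a hypothetical tiering rule: KEV entries first, then high-EPSS findings, then high-CVSS findings. The thresholds, the `Finding` type, the function names, and the placeholder CVE IDs are all illustrative assumptions, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    cvss_base: float  # CVSS Base score, 0.0 to 10.0
    epss: float       # EPSS probability, 0.0 to 1.0


def kev_cve_ids(catalog: dict) -> set[str]:
    """Collect CVE IDs from a parsed (json.load) copy of the KEV JSON feed."""
    return {entry["cveID"] for entry in catalog.get("vulnerabilities", [])}


def priority(f: Finding, kev_ids: set[str],
             epss_cutoff: float = 0.1, cvss_cutoff: float = 7.0) -> int:
    """Hypothetical tiering: 1 = fix first, 4 = routine.
    The default cutoffs are illustrative; tune them to your own risk tolerance."""
    if f.cve_id in kev_ids:
        return 1  # confirmed exploited in the wild
    if f.epss >= epss_cutoff:
        return 2  # likely to be exploited soon
    if f.cvss_base >= cvss_cutoff:
        return 3  # severe if exploited
    return 4


catalog = {"vulnerabilities": [{"cveID": "CVE-0000-0001"}]}  # stand-in for the real feed
kev_ids = kev_cve_ids(catalog)
findings = [
    Finding("CVE-0000-0002", cvss_base=9.8, epss=0.01),
    Finding("CVE-0000-0001", cvss_base=6.5, epss=0.03),
]
ranked = sorted(findings, key=lambda f: (priority(f, kev_ids), -f.epss))
print([f.cve_id for f in ranked])  # ['CVE-0000-0001', 'CVE-0000-0002']
```

In this toy run, the KEV-listed finding outranks the one with the higher CVSS score, which is exactly the behavior the KEV "Uses" above describe.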

Limitations:

- Static Nature: KEV lists may not include all vulnerabilities that pose a risk, particularly new or emerging vulnerabilities that have not yet been documented. Companies such as VulnCheck, however, provide next-generation exploit and vulnerability intelligence that can help fill this gap.

- Limited Scope: The focus on actively exploited vulnerabilities may lead organizations to overlook other exposures when prioritizing vulnerabilities. Vulnerabilities that are not currently exploited can still be critical, depending on the specific environment and risk profile.

- Dependency on Reporting: Organizations may be dependent on external reporting and updates to KEV, which can result in delays in addressing emerging threats.